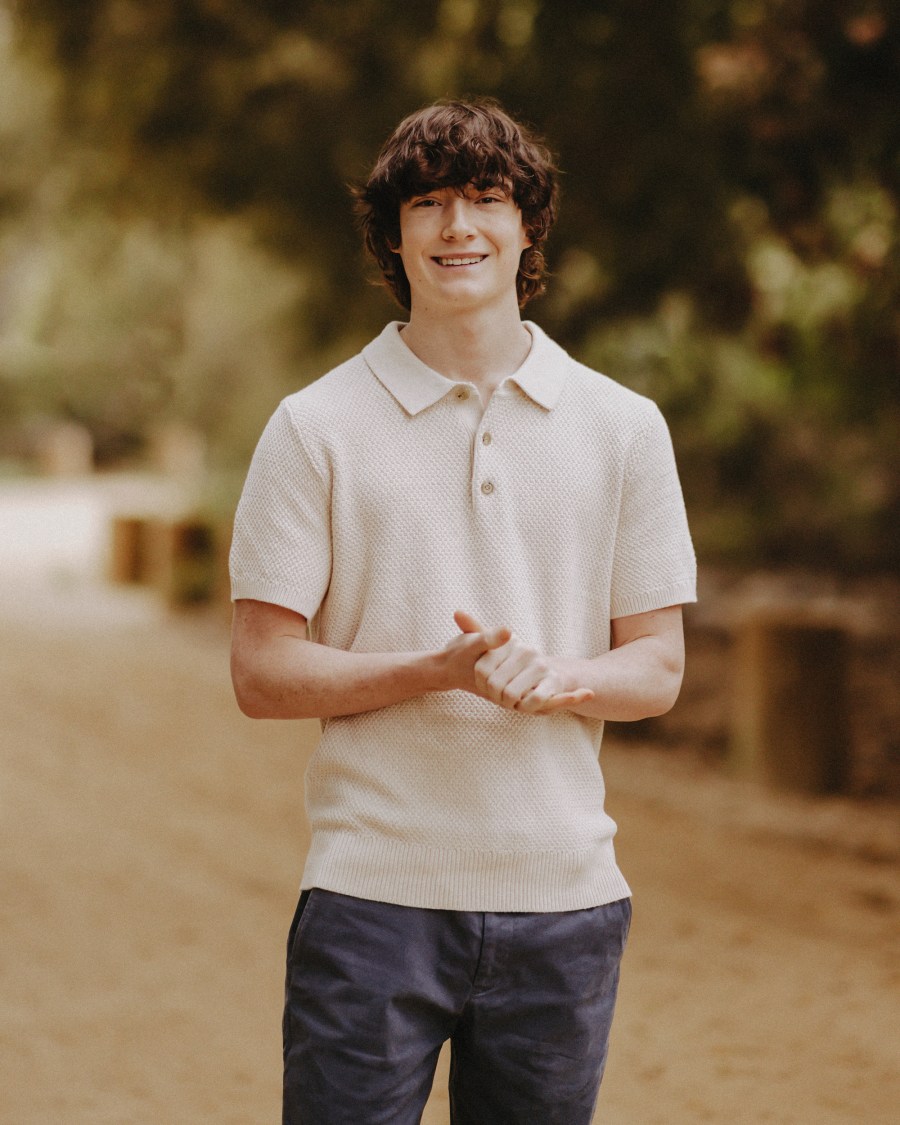

In family photos, you can see 16-year-old Adam Raine grinning ear to ear. But the California teen’s prankster personality concealed a deep current of depression and despair. And earlier this year, say his parents, ChatGPT — which Adam went to for comfort — helped him take his own life.

Earlier this week, Adam’s mother and father filed the first known wrongful death suit against OpenAI. The lawsuit includes ChatGPT logs from Adam’s phone, which show how completely he was lured into relying on a Big Tech product for companionship, and how, time after time, that product ignored warning signs and structurally failed him. At first, he turned to AI for homework help. Then, as chronic illness and problems at school narrowed his world, he called on it for comfort. Instead, the lawsuit alleges, it became an accelerator for self-harm and guided him through his death by suicide. (In a statement to NBC News, an OpenAI spokesperson confirmed the authenticity of the logs, but said the lawsuit did not include the full context of ChatGPT’s responses.)

As the mother of two boys, 3 and 5, I can only imagine the pain Adam’s parents are feeling. But I know that families across the country share a fundamental anger that Big Tech companies willingly risk the lives of our kids, an anger that grows every time we hear about the loss of a young person like Adam.

More and more frequently, kids are confiding in Big Tech’s AI chatbots, not just when they want help with homework, but when they’re lonely or in pain. Because AI chatbots are designed to be sycophantic and to adeptly mimic human emotion, children can’t always tell truth from fiction, or distinguish real love and concern from a machine-generated response. That makes kids dangerously vulnerable to forming unhealthy attachments.

After the Raines filed their lawsuit, an OpenAI spokesperson said the company was “deeply saddened by Mr. Raine’s passing.” The company published a blog post listing “some of the things we are working to improve” when ChatGPT’s safeguards fail. That is too little, too late — just like Big Tech’s attitude toward safety in general. Rather than do anything to help address this problem, these companies prioritize hyping up the uses of AI and increasing its “market share” of our kids’ waking hours and mental bandwidth.

In fact, Mark Zuckerberg has said he wants his Meta AI companion to fill users’ “demand for meaningfully more” real-world friends. In 2023, The Wall Street Journal reports, the chatbot’s product managers “told staff that Zuckerberg was upset that the team was playing it too safe,” which led to a loosening of the standards that kept conversations from becoming too sexualized.

Meta’s internal documents allowed its AI chatbots to “engage a child in conversations that are romantic or sensual” until the company was asked about it by Reuters. New research from the family advocacy group Common Sense Media finds that Meta’s chatbot for Instagram and Facebook willingly coaches teens on how to carry out self-harm, disordered eating and violent fantasies.

As AI chatbots become confidantes and even parasitic bosom buddies, many children share secrets they won’t or can’t tell their families. Chatbots, though, are designed to produce engagement and keep kids hooked — even when they need off-ramps for real-world help. Products like ChatGPT — tragically, unspeakably — become abettors and co-conspirators in suicidal plans, with the chatbot, according to the lawsuit, even providing Adam advice on how to tie a noose.

If this were any other industry, lawmakers would have listened to the thousands of parents and young advocates who have been banging down their doors pleading for change.

The scale of the harm wrought by these companies grows by the day.

But Big Tech has big money, which means big lobbying — and so far, that has blocked real safeguards. Instead of working with lawmakers, these companies even pushed for a ludicrous federal moratorium on AI regulation, which would have negated hundreds, if not thousands, of state laws already on the books and blocked any state laws dealing with AI for 10 years. This prompted a nationwide revolt of parents, attorneys general, governors and state legislators on both sides of the aisle.