Not since the War of 1812 have armed militants breached the walls of the Capitol. But this time, the insurrection was televised — and hosted on social media websites around the world.

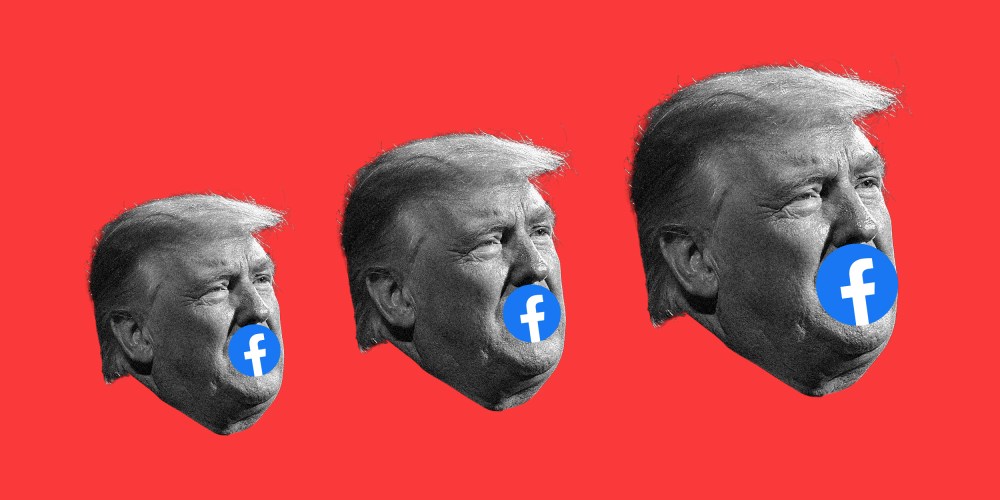

Twitter blocked Trump’s ability to tweet — albeit for less than a day. Facebook suspended his account “indefinitely.”

This is a dark time in American history. The heightened focus on social media, and on its ability to fuel extremist actions like violent attacks on the Capitol, shows yet again that the next battle over social media regulation will likely center on Section 230, the law that gives internet platforms some legal immunity from certain kinds of liability arising from user-generated content. Both President Donald Trump and President-elect Joe Biden have called for the repeal of Section 230, and the law has been intensely controversial for years.

The recent armed uprising was driven by right-wing extremists and conspiracy theorists (and the immense overlapping population that belongs to both camps), many of whom had gathered to promote #StopTheSteal events on platforms like Facebook, Twitter and Reddit, as well as the no-holds-barred extremist favorite, Parler.

Section 230, broadly speaking, protects websites like Facebook and Parler from being sued for content users post on the sites. For example, if a user posts a defamatory statement on Facebook, the defamed person cannot sue Facebook for that post. This is important because, without this protection, many websites would not be able to operate as they do now, allowing for free and open discussion and exchange of ideas. Small websites in particular would suffer from threats of litigation, and many sites would likely turn to over-censoring their users in an attempt to avoid liability.

While Section 230 can support the ability of websites to provide venues for free speech, it also immunizes websites from legal responsibility for hosting many kinds of harmful content. Due to Section 230 protections, website operators often do not have any legal obligation to take down posts that amount to harassment, doxxing, cyberbullying, nonconsensual pornography, hate speech, disinformation, hoaxes and more. In recent years, we have seen increasingly terrible offline results of online actions, including the wide spread of political disinformation.

Any regulation that targets the online speech of violent extremists seeking to overthrow a democratically elected government also risks targeting the online speech of good faith political dissidents.

In response to the Trump-supporting extremists storming the Capitol, platforms like Twitter and Facebook have finally taken the unprecedented step of banning or blocking Trump’s accounts. Twitter blocked Trump’s ability to tweet, first for less than a day, before permanently suspending his account “due to the risk of further incitement of violence.” Facebook suspended his account “indefinitely.” Twitch also banned his account. Even e-commerce platform Shopify joined in, deleting all Trump merchandise from its website.

After close review of recent Tweets from the @realDonaldTrump account and the context around them we have permanently suspended the account due to the risk of further incitement of violence. https://t.co/CBpE1I6j8Y

— Twitter Safety (@TwitterSafety) January 8, 2021

Some may say tech platforms didn’t act fast enough and could have done more. But whether we wanted a faster response or not, it is still a positive sign that platforms have increased their moderation efforts, and better that this has happened in a thoughtful and careful manner over the course of the past few years. And it’s a good thing that we were able to watch events unfold live, from multiple sources (including footage streamed and posted by the insurrectionists themselves).

In the aftermath of the attempted coup, it is easy to claim platforms should have known immediately to take down posts that are obviously harmful — like Trump’s message of support and encouragement (“We love you, you are very special”) to his violent, armed insurrectionist supporters waving the Trump campaign flag while storming the Capitol with guns and zip ties. But it is harder to create blanket rules in advance on what kinds of content should be kept up or taken down.

Content moderation is particularly difficult because there are many values at play. One reason why many platforms did not take strong actions to moderate Trump’s posts until relatively recently was that he was and still is the president of the United States. There is a clear newsworthy interest in allowing the public to access the opinions (no matter how ridiculous or incorrect) of the leader of one of the most powerful nations in the world.

Platforms have had to balance this newsworthy public interest against the potential harm caused by Trump’s posts. Recently, the scales have been tipping further and further. A combination of Trump’s increasing irrelevance and the increasing harm is what ultimately spurred platforms to finally shut him down (albeit temporarily for some).