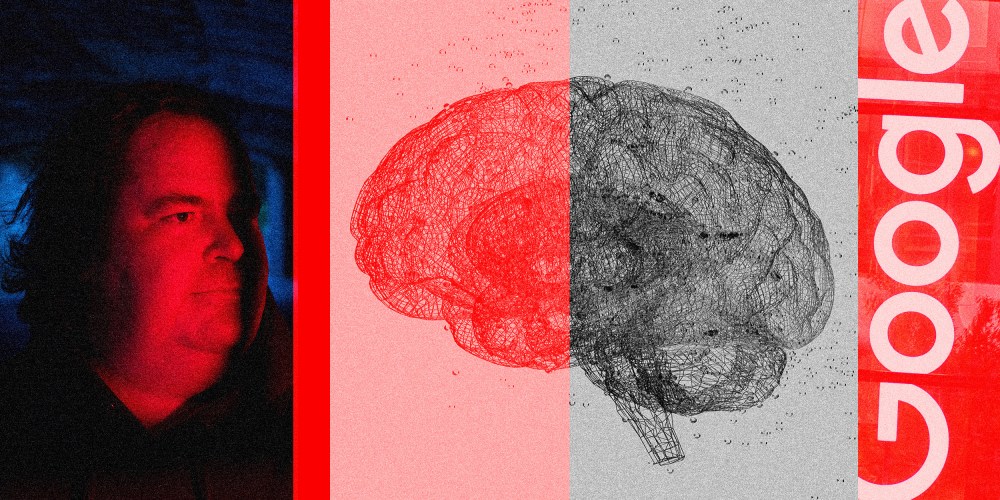

Has Google created a sentient computer program?

A senior software engineer at Google’s Responsible AI organization certainly seems to think so. Blake Lemoine told colleagues for months that he thought the company’s Language Model for Dialogue Applications (LaMDA), an extremely advanced artificially intelligent chatbot, had achieved consciousness. After he tried to obtain a lawyer to represent LaMDA and complained to Congress that Google was behaving unethically, Google placed him on paid administrative leave on Monday for violating the company’s confidentiality policy.

Google has said that it disagrees with Lemoine’s claims. “Some in the broader AI community are considering the long-term possibility of sentient or general AI, but it doesn’t make sense to do so by anthropomorphizing today’s conversational models, which are not sentient,” the company said in a statement. Many scholars and practitioners in the AI community have said similar things in recent days.

But reading over conversations between Lemoine and the chatbot, it’s hard not to be impressed by its sophistication. Its responses often sound just like a smart, engaging human with opinions on everything from Isaac Asimov’s third law of robotics to “Les Misérables” to its own purported personhood (the chatbot said it was afraid of being turned off). The exchanges raise questions about what it would really mean for artificial intelligence to achieve or surpass human-like consciousness, and how we would know when it did.


To get a better sense of why Lemoine believed there was “a ghost in the machine” and what a sentient robot might actually look like, I decided to call up Melanie Mitchell, the Davis Professor of Complexity at the Santa Fe Institute and the author of “Artificial Intelligence: A Guide for Thinking Humans.”

Our conversation, edited for length and clarity, follows.

Zeeshan Aleem: What does the word “sentient” mean in the context of artificial intelligence? Are there agreed-upon definitions or criteria for measuring it? It seems that learning, intelligence, the ability to suffer and self-awareness are distinct, even if overlapping, capacities.

Melanie Mitchell: There’s no real agreed-upon definition for any of these things. Not only for artificial intelligence, but for any system at all. The technical definition might be having feelings, having awareness and so on. It’s usually used synonymously with consciousness, which is another one of those kinds of ill-defined terms.

But people have kind of a sense, themselves, that they are sentient; you feel things, you feel sensations, you feel emotions, you feel a sense of yourself, you feel aware of what’s going on all around you. It’s kind of a colloquial notion that philosophers have been arguing about for centuries.

In the real world, the question of sentience comes up, for example, with respect to other nonhuman animals: How sentient are they? Is it OK to cause them pain and suffering? No one really has an agreed-upon definition that says, “At this level of intelligence, something is sentient.”

Based on the available information about and transcripts of LaMDA, do you believe it is sentient — and why or why not?

Mitchell: I do not believe it is sentient, by any reasonable meaning of that term. The reason is that I understand pretty well how the system works: I know what it’s been exposed to in terms of its training, and I know how it processes language. It’s very fluent, as are all the very large language systems we’ve seen recently. But it has no connection to the world beyond the language it’s been trained on. And I don’t think it can achieve anything like self-awareness or consciousness in any meaningful sense just by being exposed to language.

The other thing is that Google hasn’t published many details about this system. But my understanding is that it has nothing like memory: it processes the text as you’re interacting with it, but when you stop interacting with it, it doesn’t remember anything about the interaction. And it doesn’t have any sort of activity at all when you’re not interacting with it. I don’t think you can have sentience without some kind of memory. You can’t have any sense of self without memory. And none of these large language processing systems has even that one condition, which may not be sufficient but is certainly necessary.

How does this system work?

Mitchell: It’s a large neural network, very roughly inspired by the way neurons are connected to other neurons in the brain. It has artificial, or simulated, neuron-like pieces that are connected to one another, with numerical weights attached to the connections. This is all in software, not hardware. It learns by being given text, like sentences or paragraphs, with some part of the text blanked out, and it has to predict what words should come next.
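For readers who want to see what a “simulated neuron” with “numerical weights” amounts to, here is a minimal Python sketch of a single textbook artificial neuron. It assumes a sigmoid activation and uses made-up weights; it illustrates the general idea only and is not LaMDA’s architecture, which connects vastly more of these units.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """A single simulated neuron: a weighted sum of inputs squashed by a sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    # Each connection contributes input * weight; the bias shifts the total.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid maps any real number into (0, 1), a rough "firing strength".
    return 1.0 / (1.0 + math.exp(-total))


# Made-up example values: 0.5 + 0.0 + 0.25 - 0.75 = 0, and sigmoid(0) = 0.5.
print(neuron([1.0, 0.0, 1.0], [0.5, -1.0, 0.25], bias=-0.75))  # → 0.5
```

In this picture, training means repeatedly nudging the weights so that the network’s outputs better match the text it is shown.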

At the beginning, it doesn’t, and can’t, know which words come next. But by being trained on billions and billions of human-created sentences, it eventually learns what kinds of words come next, and it’s able to put words together very well. It’s so big, with so many simulated neurons, that it’s able to essentially memorize all kinds of human-created text, recombine it and stitch different pieces together.
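The idea of learning “what kinds of words come next” from examples can be illustrated without a neural network at all. The sketch below swaps in the simplest possible technique, a bigram model that counts which word follows which in a tiny hypothetical corpus and turns the counts into probabilities. A system like LaMDA learns far richer patterns, over much longer stretches of text, by adjusting its weights rather than by counting.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next word | current word) by counting adjacent word pairs."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    # Normalize each word's follower counts into probabilities that sum to 1.
    model: dict[str, dict[str, float]] = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        model[current] = {word: count / total for word, count in followers.items()}
    return model


# A tiny hypothetical corpus standing in for "billions and billions" of sentences.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
print(model["sat"])  # → {'on': 1.0}
print(model["cat"])  # → {'sat': 0.5, 'ate': 0.5}
```

With more text, the counts for each word spread across more possible followers, which is the toy version of a model learning which continuations are likely.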

Now, LaMDA has a few other things added to it. It has learned not only from that prediction task but also from human dialogue, and it has a few other bells and whistles that Google gave it. When it’s interacting with you, it’s no longer learning. You put in something like, “Hello, LaMDA, how are you?” and it starts picking words based on the probabilities it computes. It’s able to do that very, very fluently because of the hugeness of the system and the hugeness of the data it’s been trained on.
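Mitchell’s description of generation, in which the trained model picks words one at a time based on the probabilities it computes while learning nothing new, can be sketched as a sampling loop. The probability table here is hypothetical and hand-written, and each choice depends only on the previous word; a real model computes fresh probabilities from the whole conversation so far at every step.

```python
import random

# Hypothetical, hand-written next-word probabilities standing in for the
# numbers a trained model would compute. They stay fixed during generation.
NEXT_WORD_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"hello": 0.7, "hi": 0.3},
    "hello": {"there": 0.6, "friend": 0.4},
    "hi": {"there": 1.0},
    "there": {"<end>": 1.0},
    "friend": {"<end>": 1.0},
}


def generate(
    probs: dict[str, dict[str, float]], rng: random.Random, max_words: int = 20
) -> list[str]:
    """Pick one word at a time, each drawn according to the table's probabilities."""
    words: list[str] = []
    current = "<start>"
    for _ in range(max_words):
        options = probs[current]
        # random.choices draws one item, weighting each option by its probability.
        current = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        if current == "<end>":
            break
        words.append(current)
    return words


print(" ".join(generate(NEXT_WORD_PROBS, random.Random(0))))
```

Different seeds yield different replies from the same fixed table; nothing in `NEXT_WORD_PROBS` changes while the loop runs.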

What do we know about Lemoine and how could a specialist be duped by a machine that he’s trained to assess? It raises broader questions of how hard it must be to assess the capacity of something that’s designed to communicate like a human.

Mitchell: I don’t know much about Lemoine, except what I’ve read in the media, but I didn’t get the impression that he was an AI expert. He’s a software engineer whose task was to converse with this system and figure out ways to make it less racist and sexist, problems these systems have because they’ve learned from human language. There’s also the issue, as I read in The Washington Post, that he’s a religious person; he’s actually an ordained priest in some form of Christianity, and he says himself that his assessment of the system came from his being a priest, not from any scientific assessment. None of the other people at Google who were looking at this system felt that it was sentient in any way.

That being said, you know, experts are humans, too. They can be fooled. We humans are very prone to interpreting text that sounds human as having an agent behind it, some intelligent conscious agent. And that makes sense, right? Language is a social thing; it’s communication between conscious agents who have reasons for communicating. So we assume that’s what’s happening. People are fooled even by much simpler systems than LaMDA. It’s been happening since the very beginning of AI.


Could you share any examples?

Mitchell: The canonical example is the ELIZA program, which was created in the 1960s by Joseph Weizenbaum. It was the dumbest chatbot possible. It imitated a psychiatrist, and it had some templates. So you would say, “I’ve been having some problems with my mother.” And it would say, “Tell me about the problems with your mother.” Its template was, “Tell me about the blank.”
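ELIZA’s trick of turning “I’ve been having some problems with my mother” into “Tell me about the problems with your mother” takes only a pattern match and a pronoun swap. The Python sketch below is a toy loosely in the spirit of ELIZA, not Weizenbaum’s original program; the two rules, the reflection table and the fallback reply are all hypothetical.

```python
import re

# Hypothetical pronoun swaps so the user's words can be echoed back ("my" -> "your").
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "i'm": "you're"}

# Hypothetical templates: a pattern to match and a reply whose slot
# ("the blank") is filled with the user's own words.
RULES = [
    (re.compile(r".*\bproblems with (.+)", re.IGNORECASE), "Tell me about the problems with {0}."),
    (re.compile(r".*\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
]


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(statement: str) -> str:
    """Return the first matching template, filled in, or a generic prompt."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please go on."  # fallback when no template matches


print(respond("I've been having some problems with my mother."))
# → Tell me about the problems with your mother.
```

Nothing in it models mothers, problems or feelings; it only copies the user’s words into a slot.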

People who talked to it felt deeply that there was an actual understanding person behind it. Weizenbaum was so alarmed by the potential for people to be fooled by AI that he wrote a whole book about it in the 1970s. So it’s not a new phenomenon. And since then we’ve had all kinds of chatbots, much less complicated than LaMDA, that have passed so-called Turing tests [a test of a machine’s ability to exhibit intelligence equivalent to, or indistinguishable from, that of a human]. We have people being fooled by bots on Twitter, Facebook and other social media sites.